How Will Super Alignment Work? Challenges and Criticisms of OpenAI's Approach to AGI Safety & X-Risk

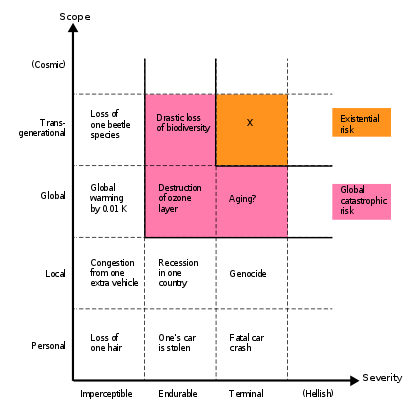

Existential risk from artificial general intelligence - Wikipedia

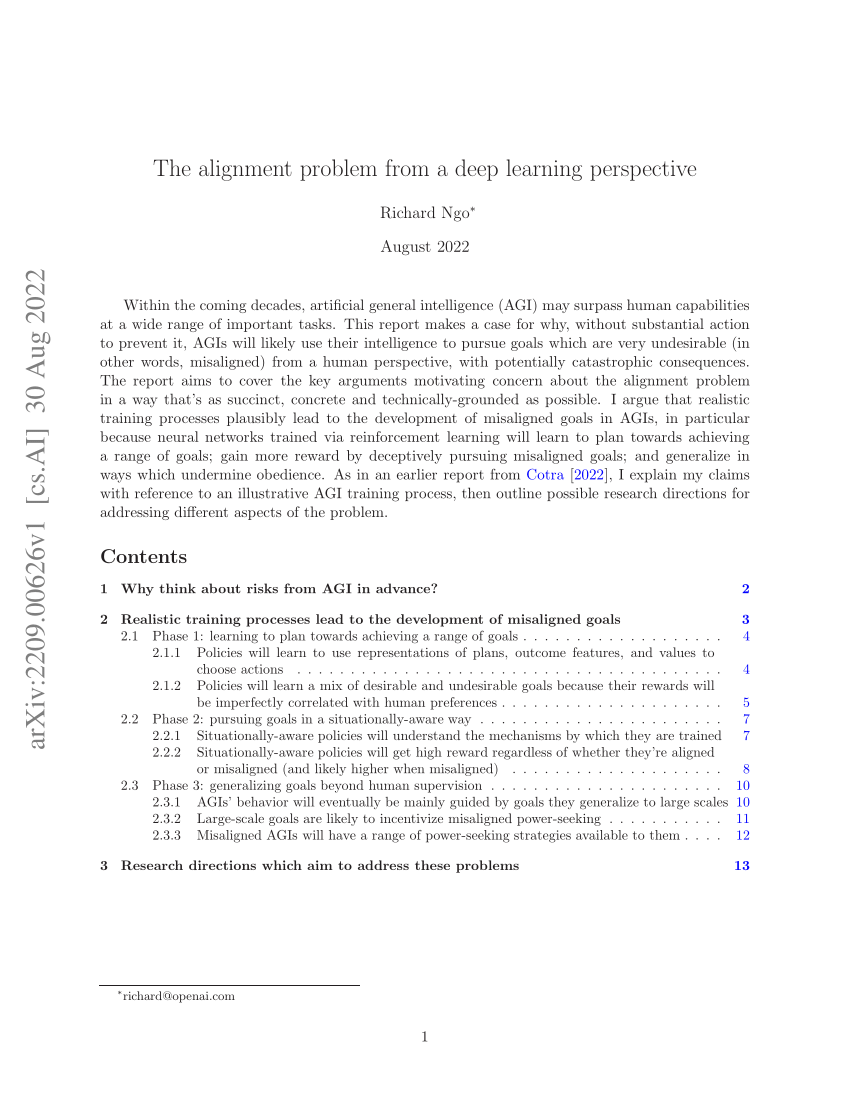

(PDF) The alignment problem from a deep learning perspective

Yvonne Tesch on LinkedIn: How Will Super Alignment Work

Timelines are short, p(doom) is high: a global stop to frontier AI development until x-safety consensus is our only reasonable hope — EA Forum

Timeline of the 106-Hour OpenAI Saga, Altman Will Return, New Board Formed

Generative AI VIII: AGI Dangers and Perspectives - Synthesis AI

Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy - ScienceDirect

AI #14: A Very Good Sentence - by Zvi Mowshowitz

OpenAI's Superalignment Initiative: A key step-up in AI Alignment Research

OpenAI Launches Superalignment Taskforce — LessWrong

(PDF) The risks associated with Artificial General Intelligence: A systematic review

What is the Control Problem?