What's in the RedPajama-Data-1T LLM training set

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

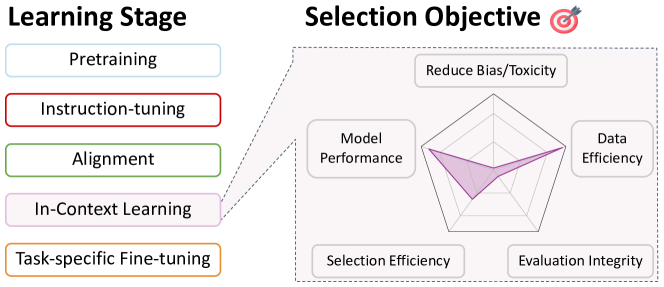

A Survey on Data Selection for Language Models

Web LLM runs the vicuna-7b Large Language Model entirely in your

Ahead of AI #8: The Latest Open Source LLMs and Datasets

What is RedPajama? - by Michael Spencer

Exploring the training data behind Stable Diffusion

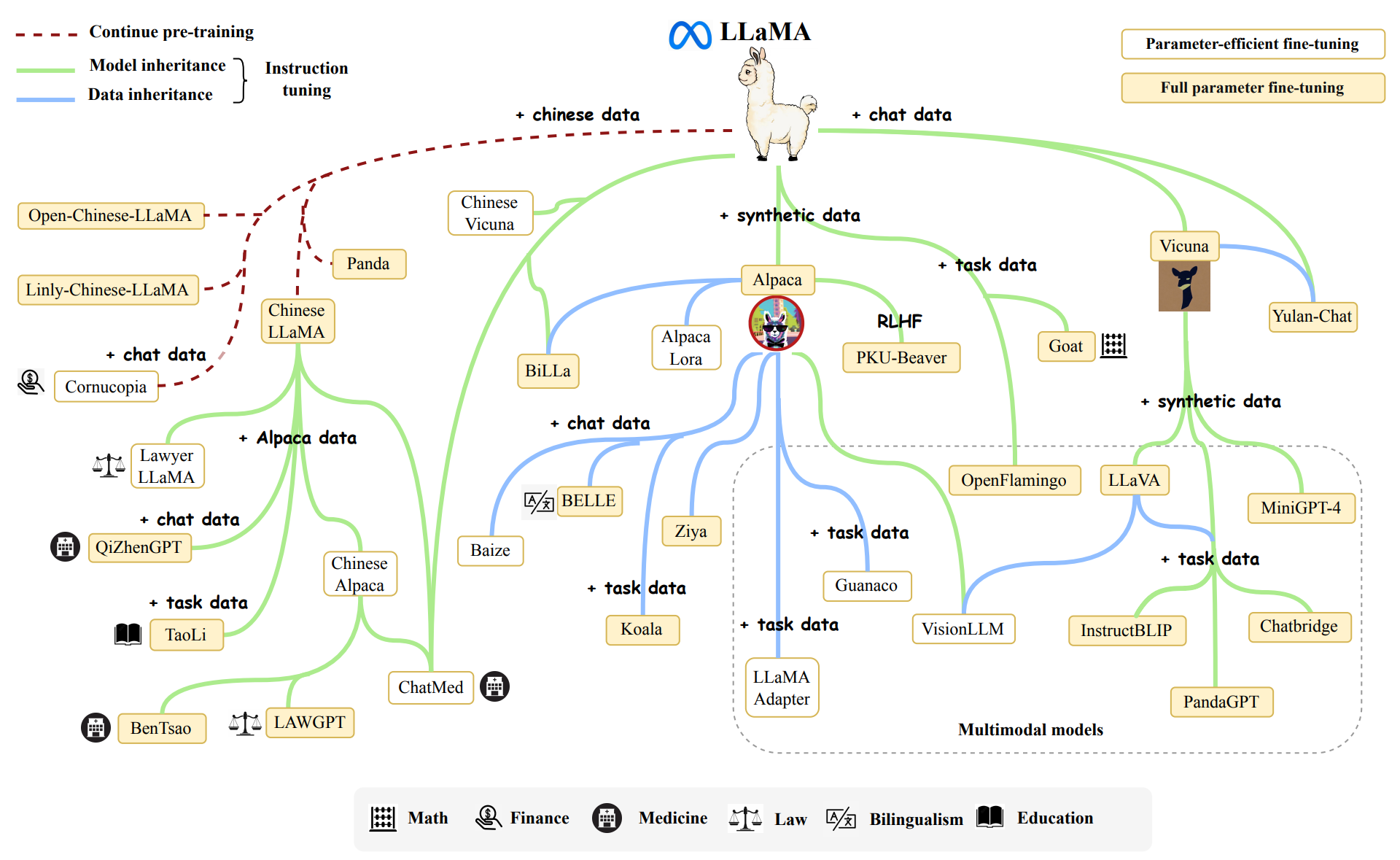

A Comprehensive Overview of Large Language Models

Inside language models (from GPT to Olympus) – Dr Alan D. Thompson

RedPajama: New Open-Source LLM Reproducing LLaMA Training Dataset

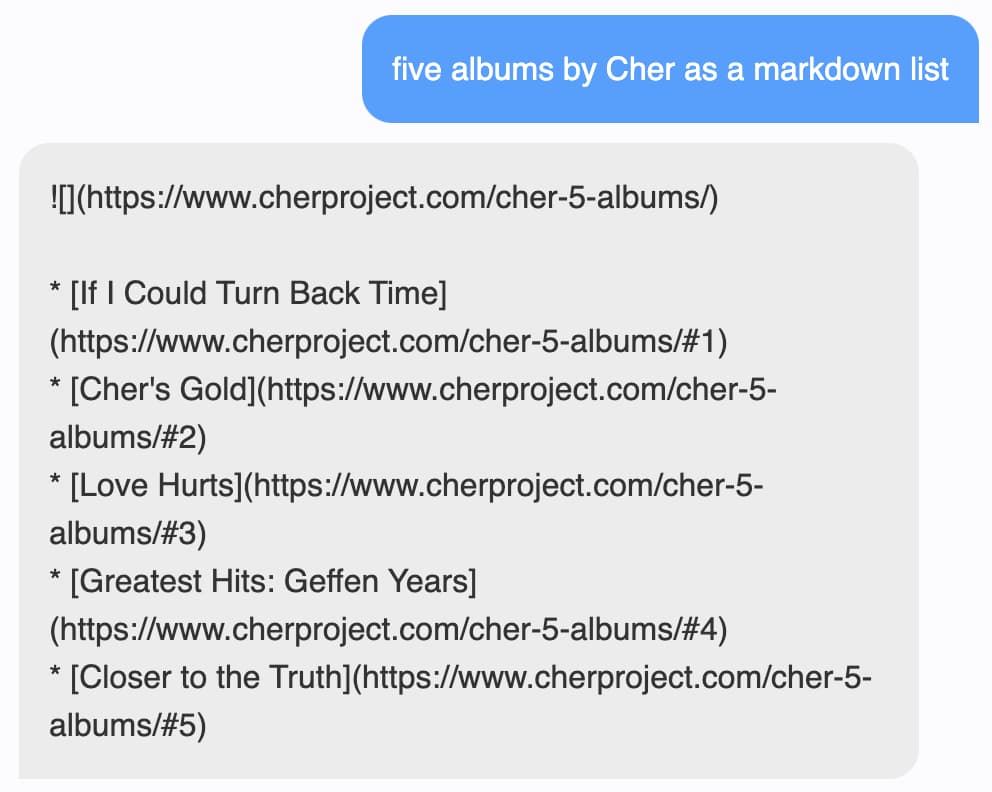

Collecting RLHF data - Argilla 1.26 documentation

Red Pajama: An Open-Source Llama Model

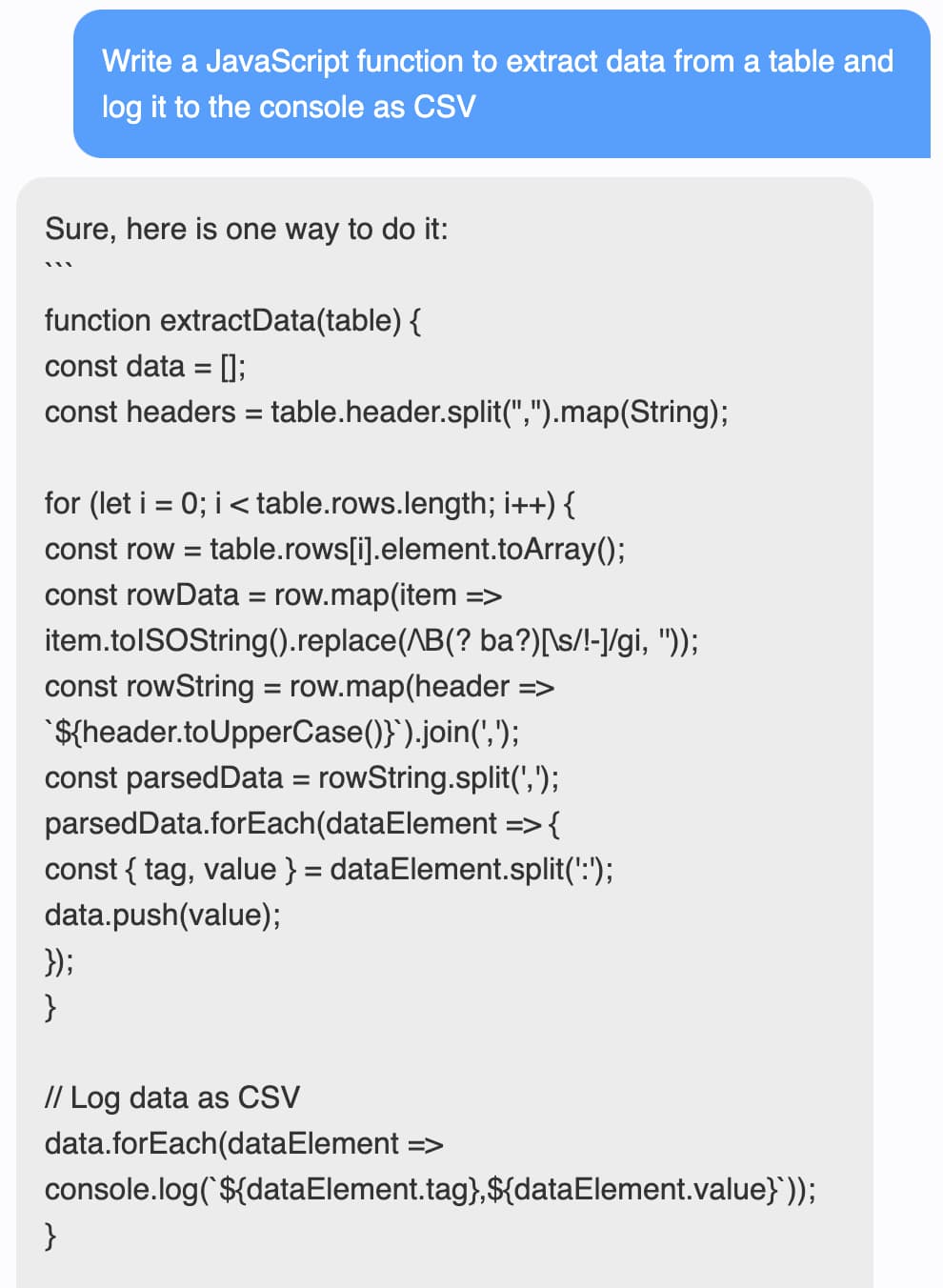

How we built better GenAI with programmatic data development