RedPajama replicates LLaMA dataset to build open source, state-of-the-art LLMs

RedPajama, a project to create fully open-source large language models, has released a 1.2-trillion-token dataset that follows the LLaMA recipe.

LLaMA clone: RedPajama – first open-source decentralized AI with open dataset

1. LLM Ingredients: Training Data - Designing Large Language Model Applications [Book]

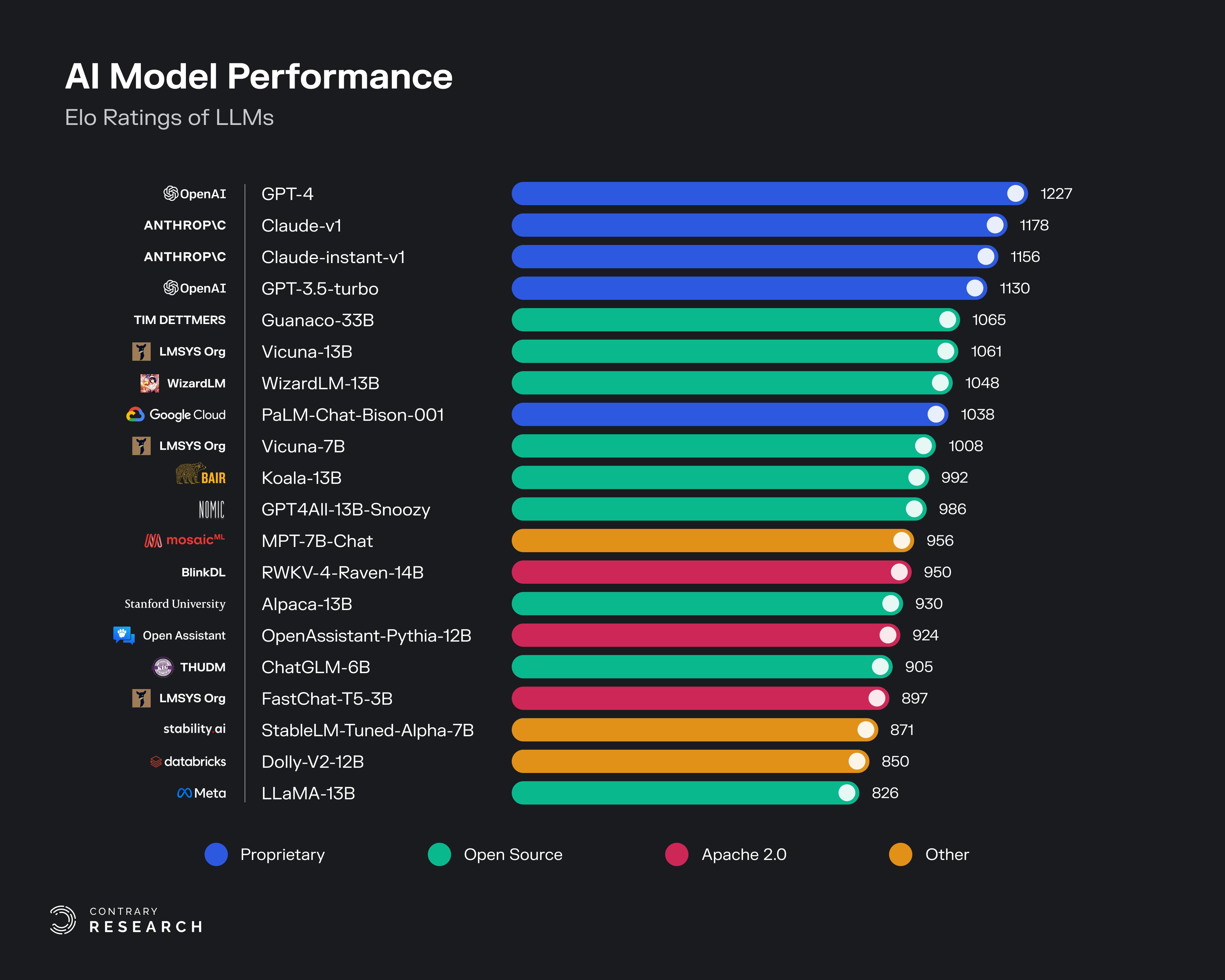

Report: The Openness of AI | A Contrary Research Deep Dive

AI news that caught my attention today [23/4/24] | shanda

LLLMs: Local Large Language Models

2023 in science - Wikipedia

The data that trains AI is under the spotlight — and even I'm weirded out

[N] OpenLLaMA: An Open Reproduction of LLaMA : r/MachineLearning

S_04. Challenges and Applications of LLMs - Deep Learning Bible - 3. Natural Language Processing - Eng.

🎮 Replica News

Best Open Source LLMs of 2024 — Klu

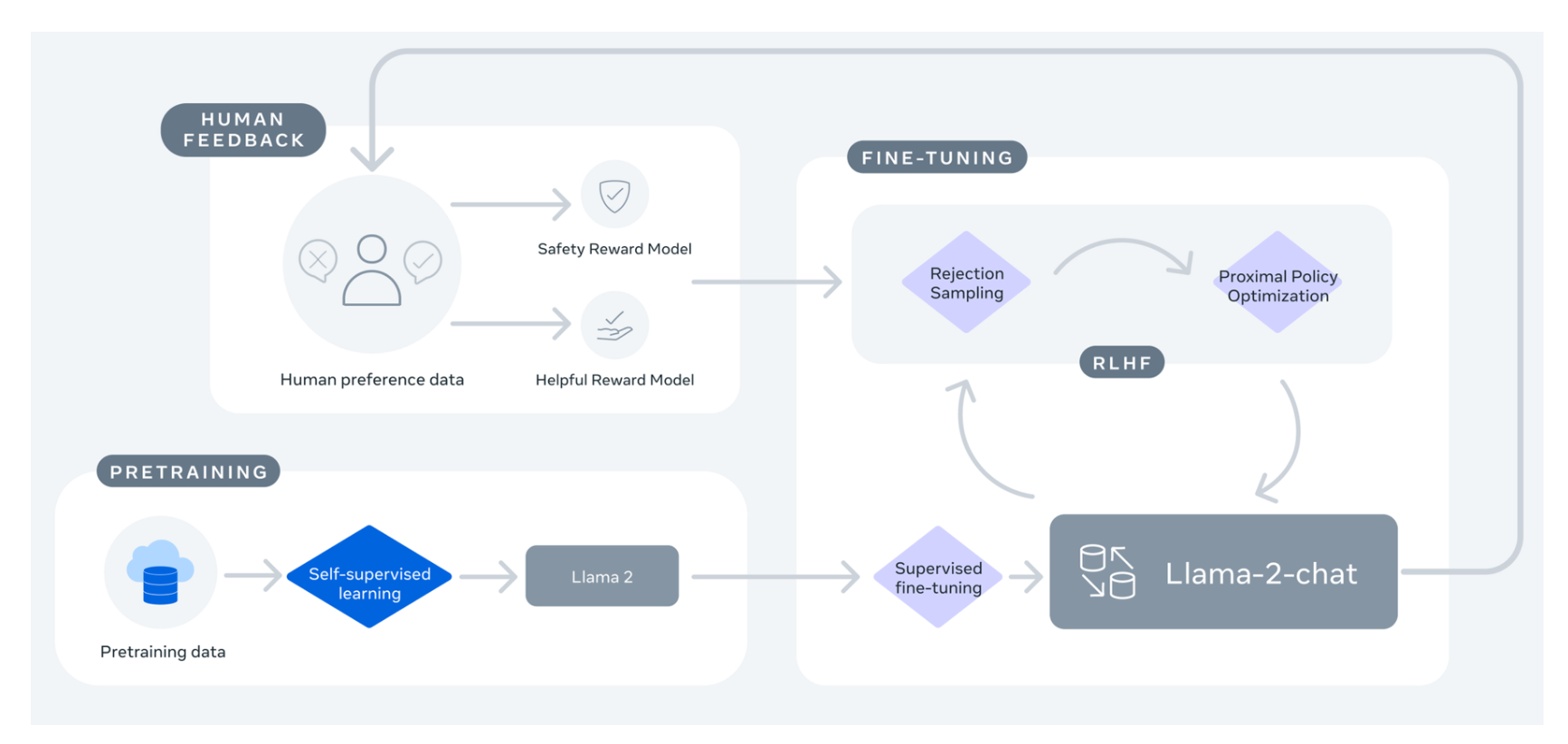

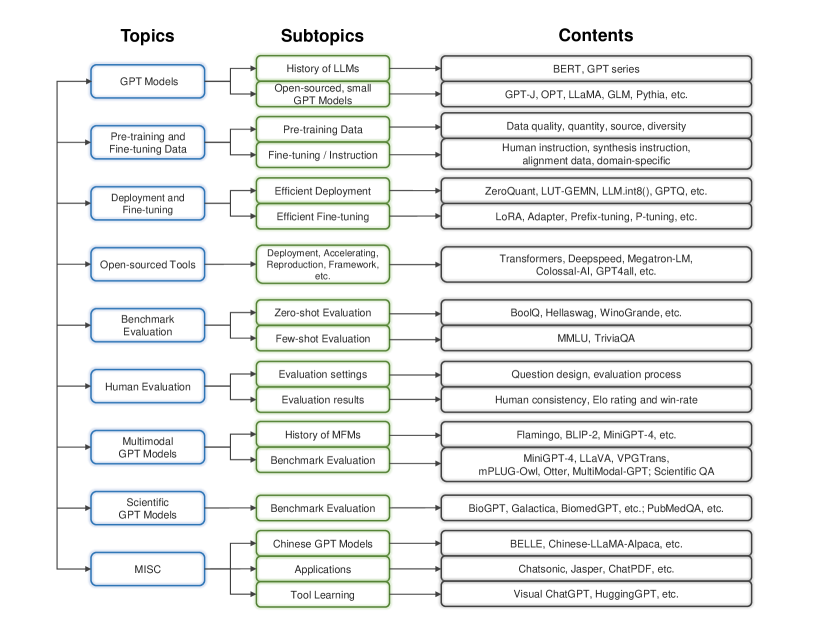

[2308.14149] Examining User-Friendly and Open-Sourced Large GPT Models: A Survey on Language, Multimodal, and Scientific GPT Models
