BERT-Large: Prune Once for DistilBERT Inference Performance

Compress BERT-Large with pruning & quantization to create a version that maintains accuracy while beating baseline DistilBERT performance & compression metrics.

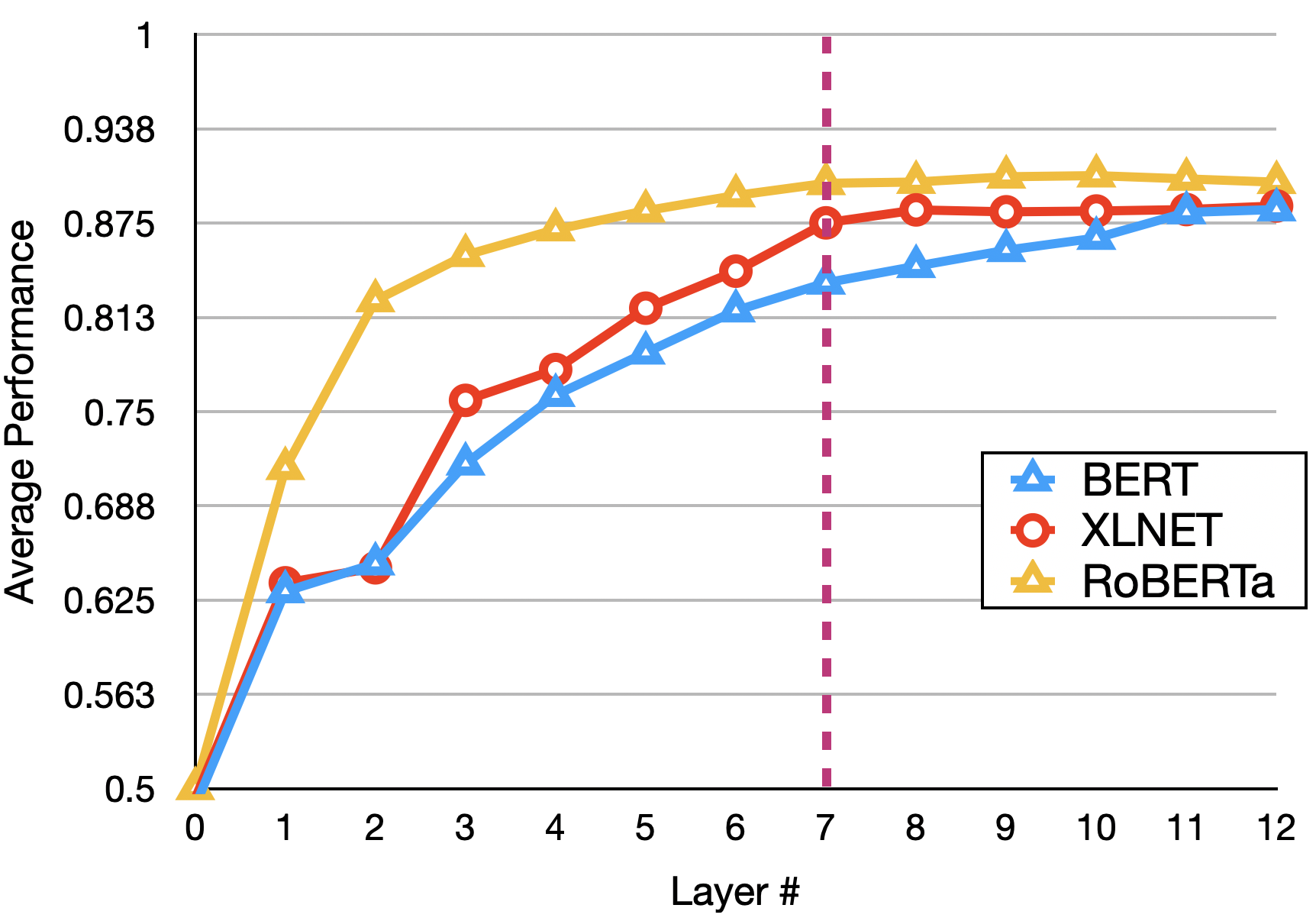

2004.03844] On the Effect of Dropping Layers of Pre-trained Transformer Models

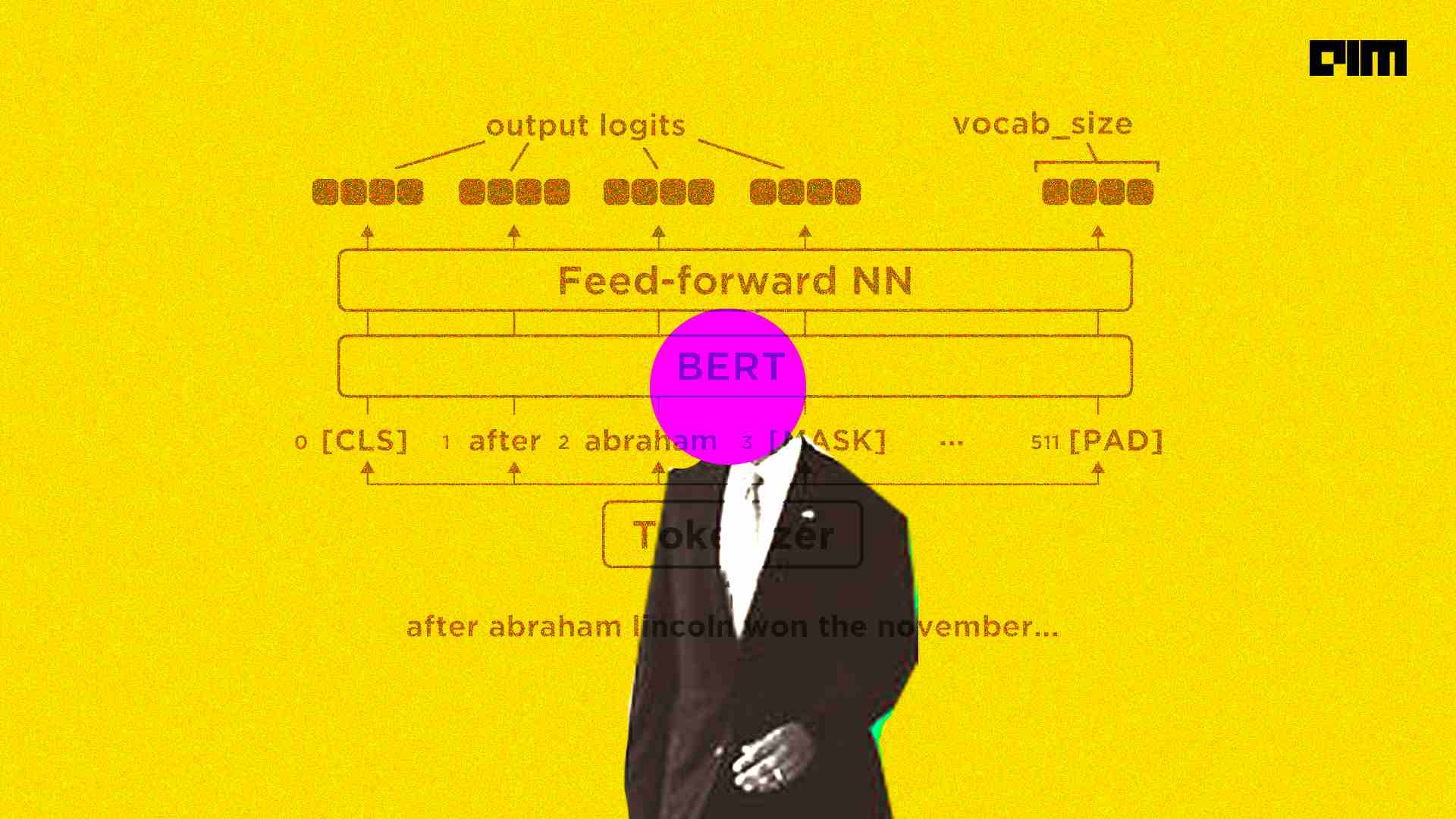

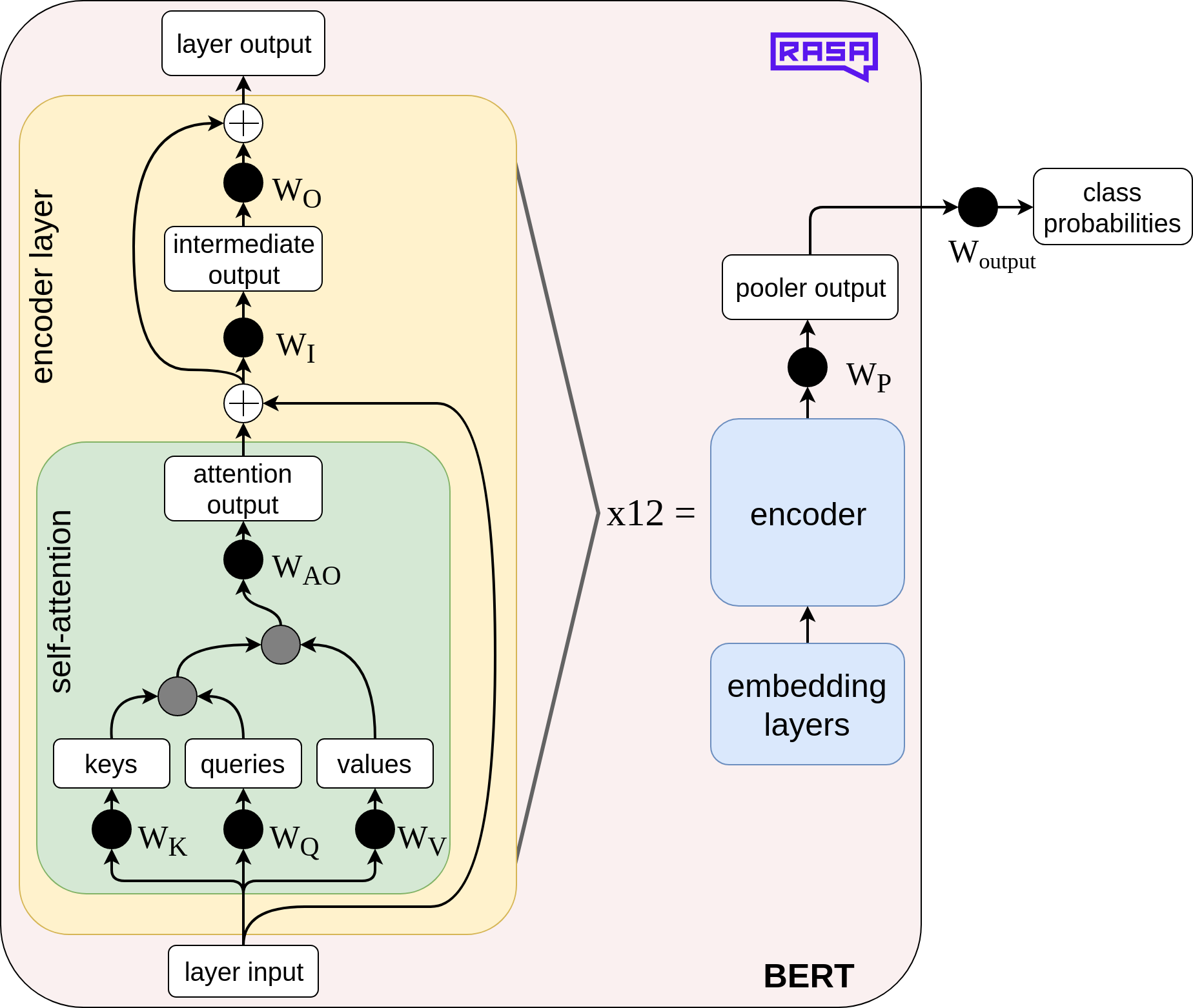

BERT model flowchart. Download Scientific Diagram

Dipankar Das on LinkedIn: Intel Xeon is all you need for AI

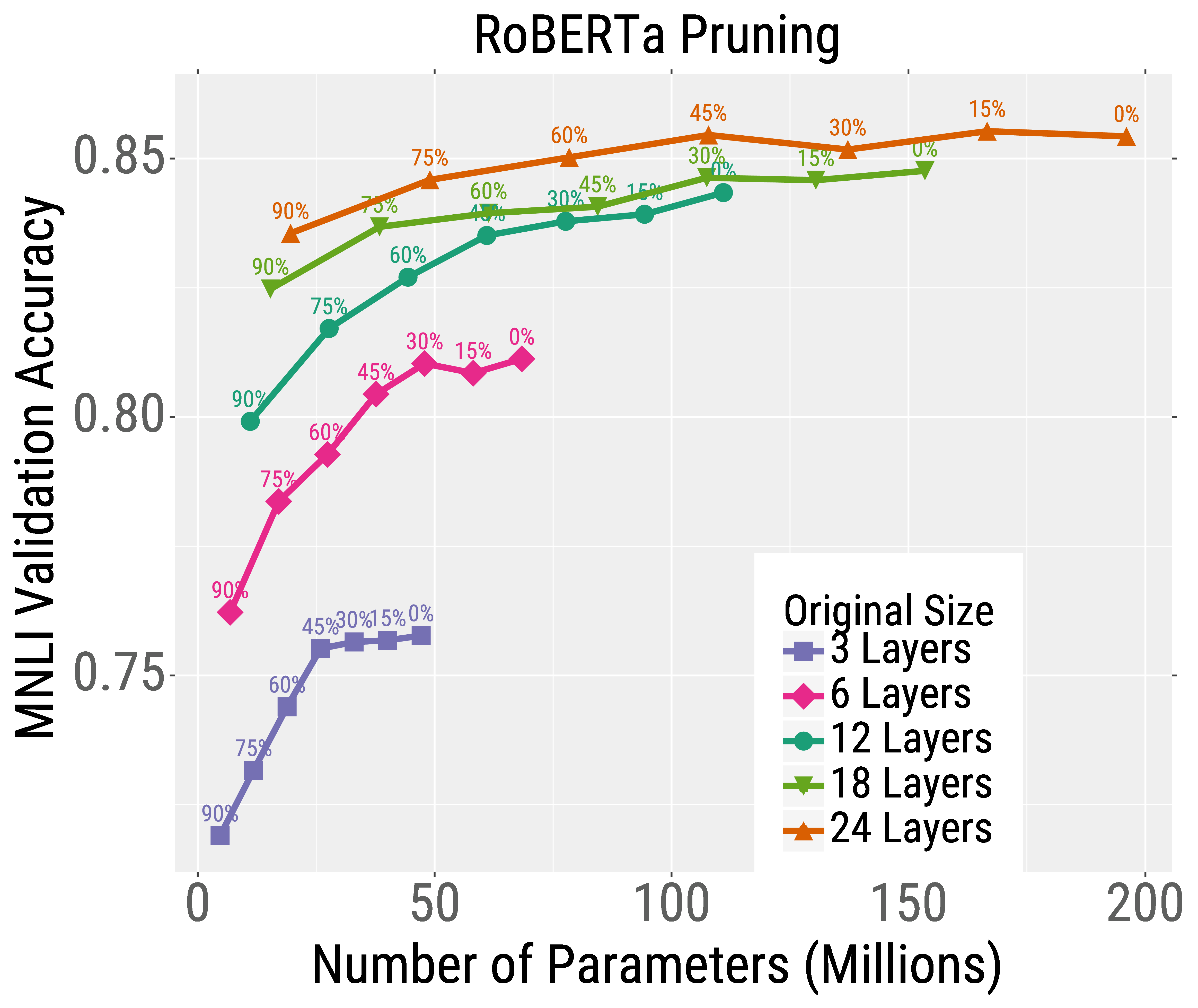

Poor Man's BERT - Exploring layer pruning

Neural Magic open sources a pruned version of BERT language model

Speeding up transformer training and inference by increasing model size - ΑΙhub

Learn how to use pruning to speed up BERT, The Rasa Blog

Large Transformer Model Inference Optimization

Efficient BERT with Multimetric Optimization, part 2

Mark Kurtz on LinkedIn: BERT-Large: Prune Once for DistilBERT

Excluding Nodes Bug In · Issue #966 · Xilinx/Vitis-AI ·, 57% OFF

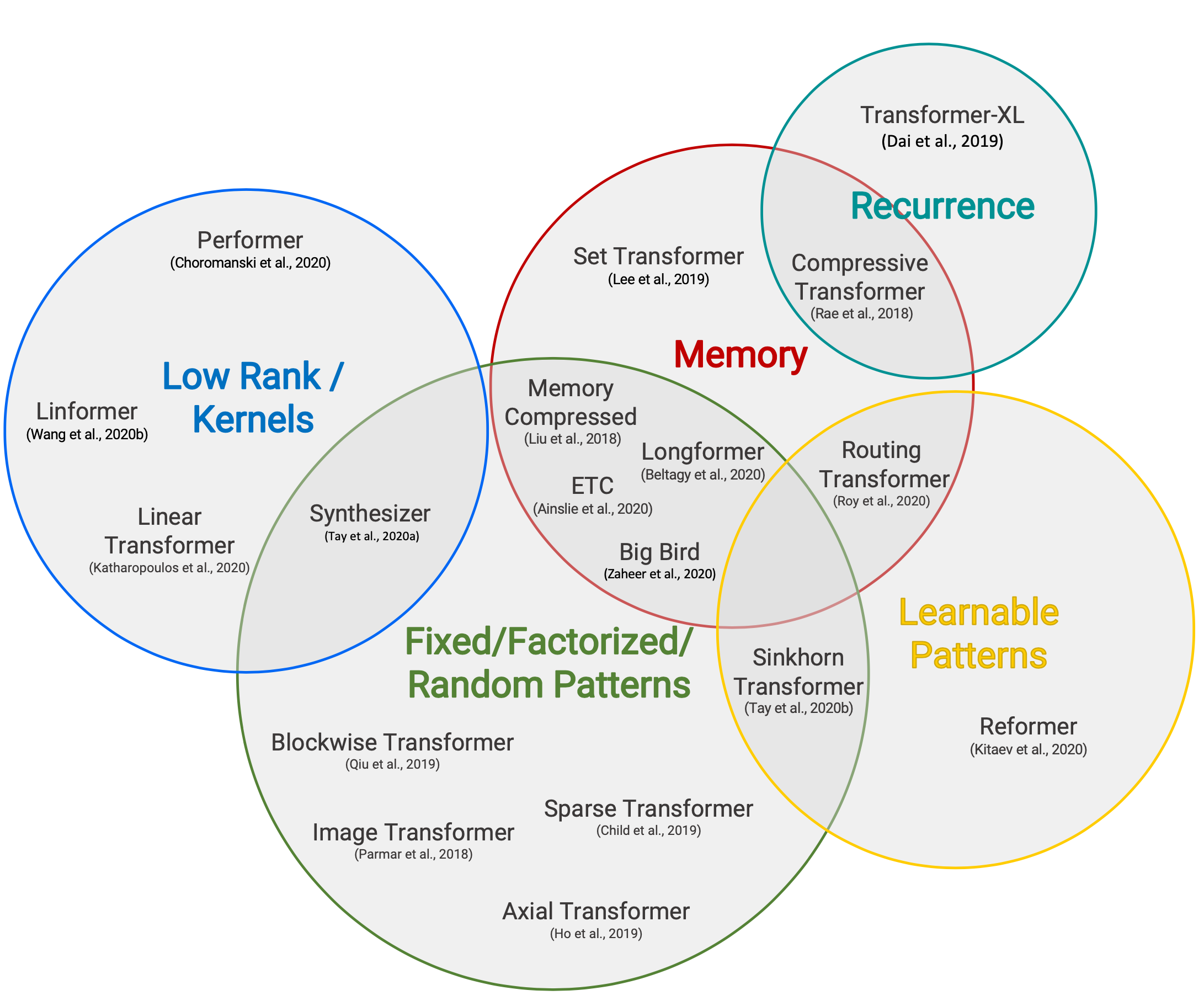

A survey of techniques for optimizing transformer inference - ScienceDirect