DistributedDataParallel non-floating point dtype parameter with

🐛 Bug

Using DistributedDataParallel on a model that has at least one non-floating point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re…"
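The report does not include a reproduction script. The sketch below shows the kind of model it describes, assuming a recent PyTorch. The class names `ModelWithIntParam` and `ModelWithIntBuffer` and the helper `can_require_grad` are hypothetical, and no DDP process group is created. An integer parameter is accepted as long as `requires_grad=False`. Any attempt to switch `requires_grad` back on for it raises the `RuntimeError` quoted above. The sketch does not confirm which code path inside DDP performs that switch.

```python
import torch
import torch.nn as nn


class ModelWithIntParam(nn.Module):
    """Hypothetical model matching the report: a float layer plus a frozen
    integer-dtype parameter (requires_grad=False)."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Allowed only because requires_grad=False: autograd tracks
        # floating point (and complex) tensors, not integer ones.
        self.step = nn.Parameter(torch.zeros(1, dtype=torch.long), requires_grad=False)

    def forward(self, x):
        return self.linear(x)


class ModelWithIntBuffer(nn.Module):
    """Hypothetical variant: the integer tensor is registered as a buffer,
    so it no longer appears in parameters()."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        self.register_buffer("step", torch.zeros(1, dtype=torch.long))

    def forward(self, x):
        return self.linear(x)


def can_require_grad(t: torch.Tensor) -> bool:
    """Return True if a copy of `t` may have requires_grad set to True."""
    try:
        t.detach().clone().requires_grad_(True)
        return True
    except RuntimeError:
        # Raised for integer dtypes ("Only Tensors of floating point ...").
        return False


if __name__ == "__main__":
    m = ModelWithIntParam()
    print(m.step.dtype, m.step.requires_grad, can_require_grad(m.step))
    print([name for name, _ in ModelWithIntBuffer().named_parameters()])
```

If the integer tensor never needs to be optimized, registering it as a buffer is one possible workaround. DDP synchronizes buffers separately (by default `broadcast_buffers=True` copies them from rank 0) and does not treat them as parameters. Running one process per GPU, which is the layout the PyTorch DDP documentation recommends, may also avoid the WORLD_SIZE <= nGPUs/2 configuration in the report.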

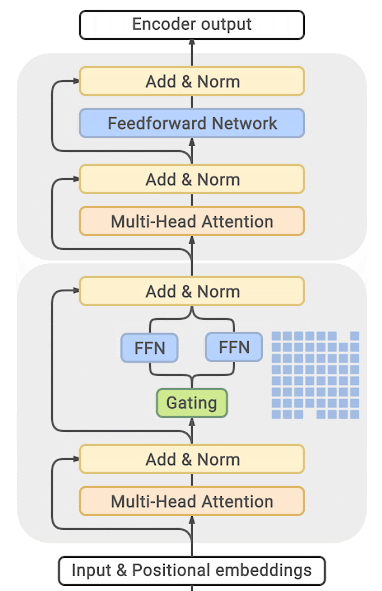

A comprehensive guide of Distributed Data Parallel (DDP), by François Porcher

55.4 [Train.py] Designing the input and the output pipelines - EN - Deep Learning Bible - 4. Object Detection - Eng.

Sharded Data Parallel FairScale documentation

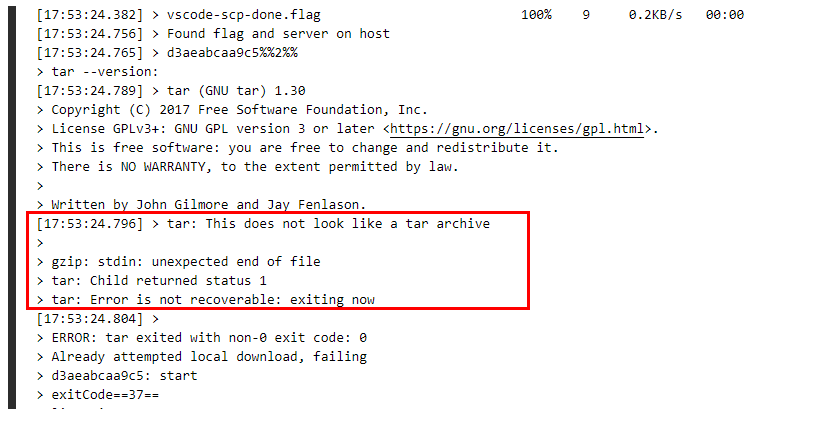

What Do I Do If Error Message Failed to install the VS Code Server or tar: Error is not recoverable: exiting now Is Displayed?_ModelArts_FAQs_Notebook (New Version)_Failures to Access the Development Environment Through

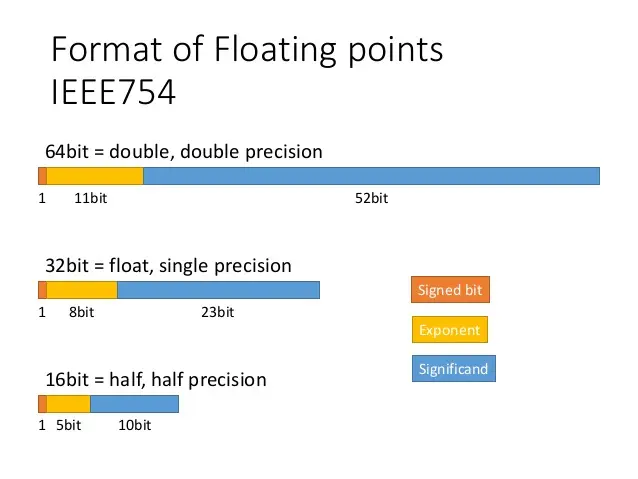

Aman's AI Journal • Primers • Model Compression

/content/images/2022/10/amp.png

torch.nn、(一)_51CTO博客_torch.nn

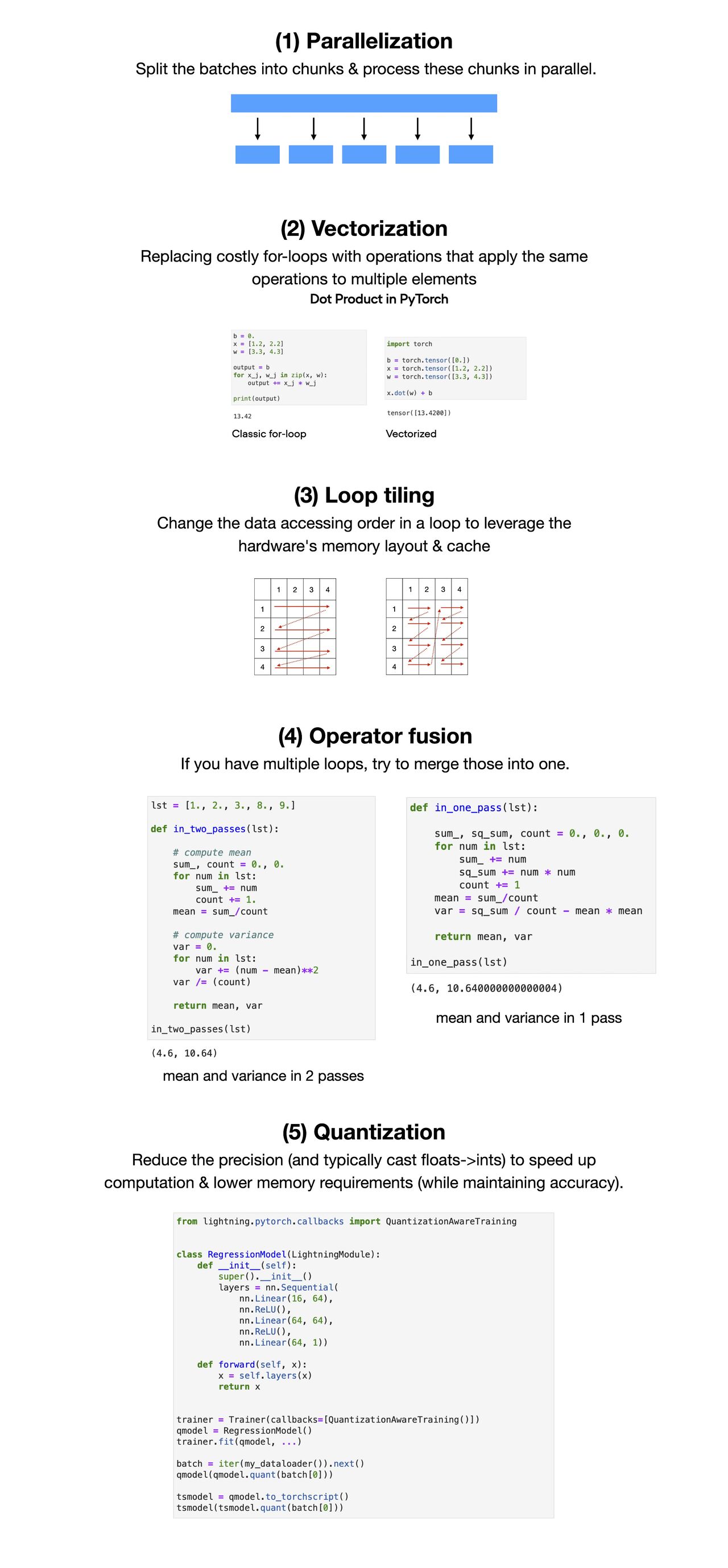

Performance and Scalability: How To Fit a Bigger Model and Train It Faster

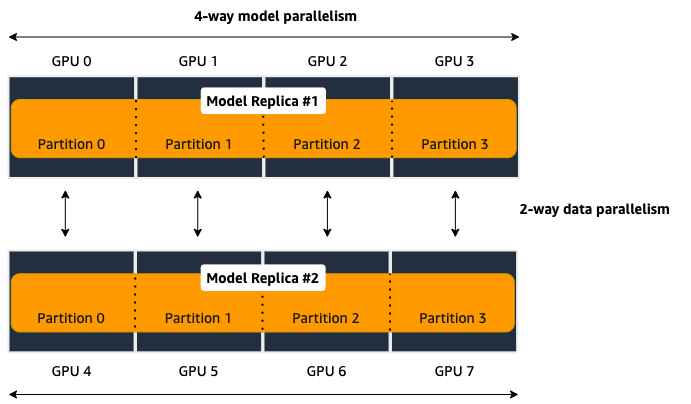

Run a Distributed Training Job Using the SageMaker Python SDK — sagemaker 2.113.0 documentation

A comprehensive guide of Distributed Data Parallel (DDP), by François Porcher

Straightforward yet productive tricks to boost deep learning model training, by Nikhil Verma

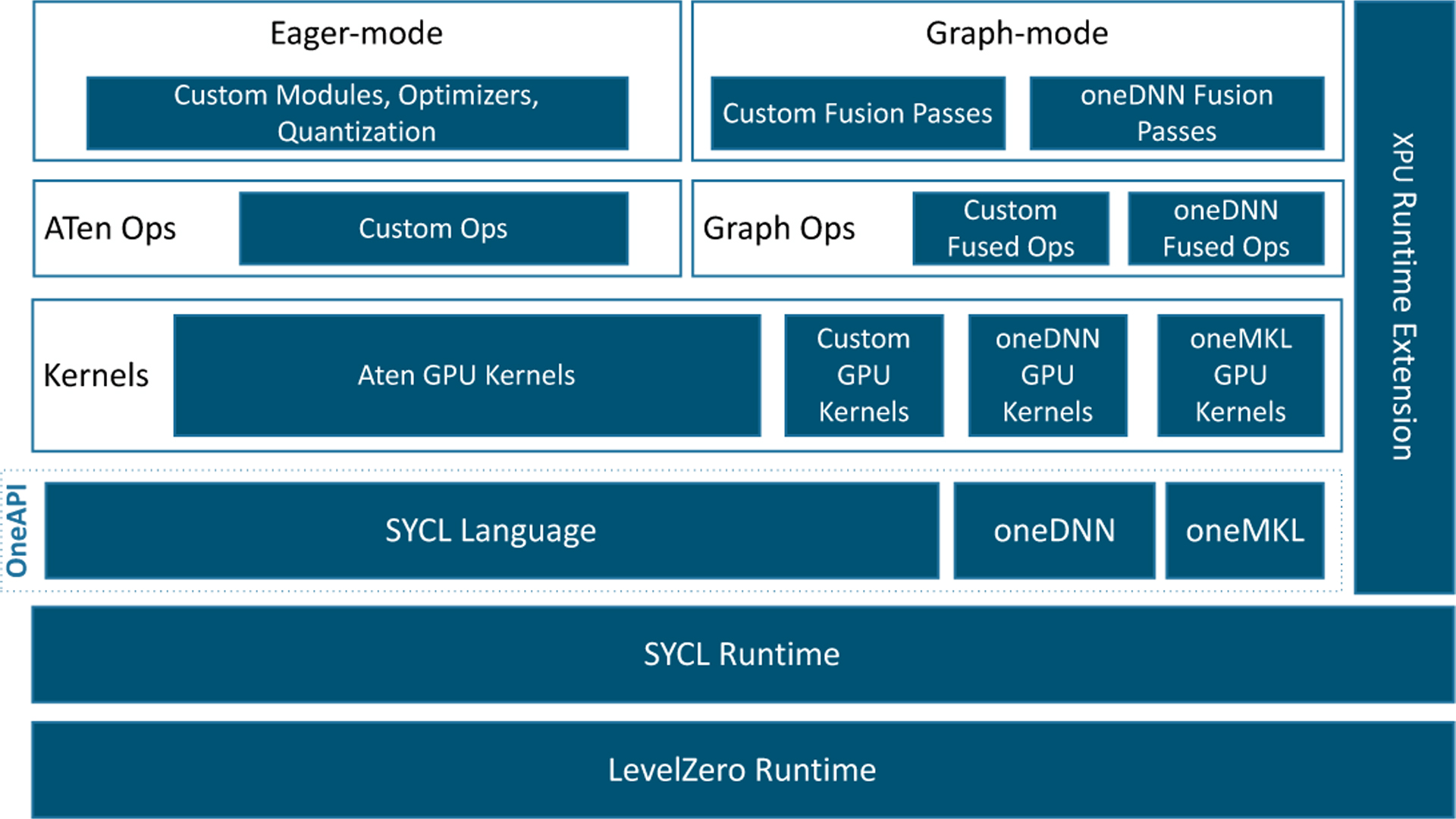

Introducing the Intel® Extension for PyTorch* for GPUs

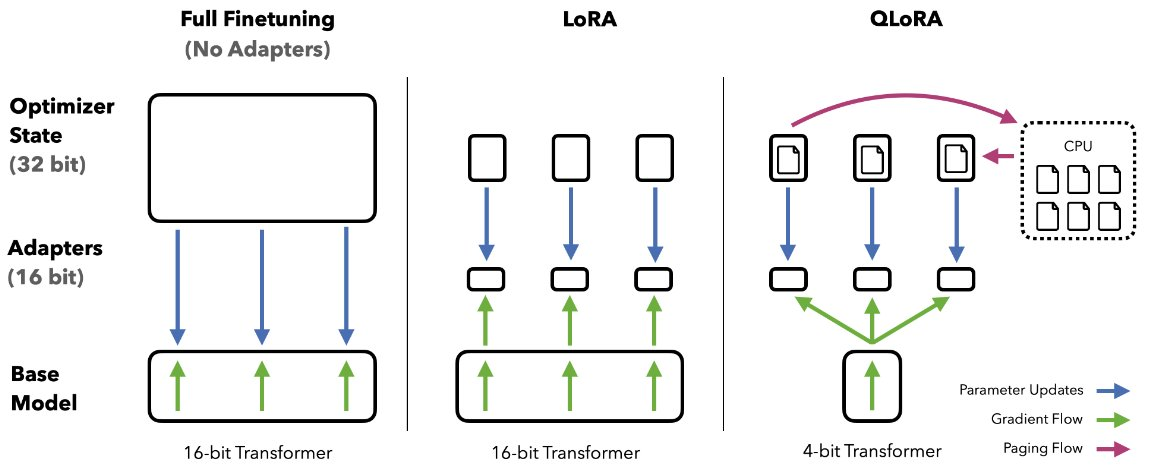

Finetune LLMs on your own consumer hardware using tools from PyTorch and Hugging Face ecosystem

Aman's AI Journal • Primers • Model Compression

Distributed PyTorch Modelling, Model Optimization, and Deployment